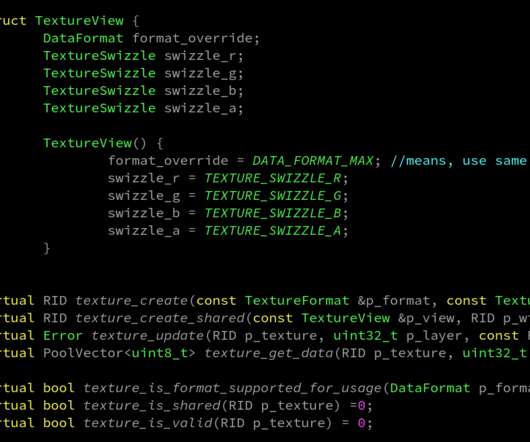

How to get an array of pixel colors from an image?

Cocos

FEBRUARY 28, 2024

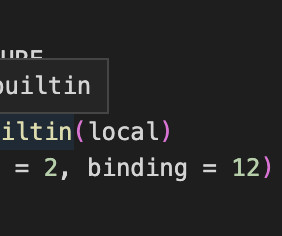

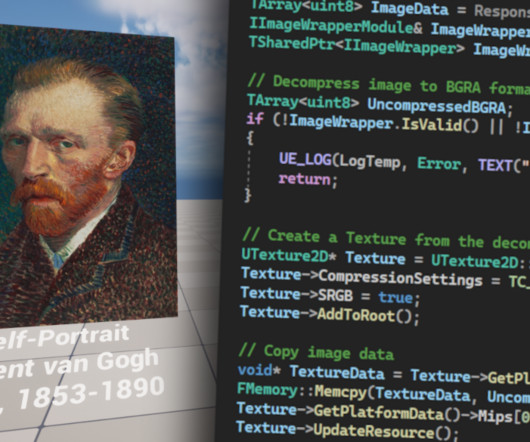

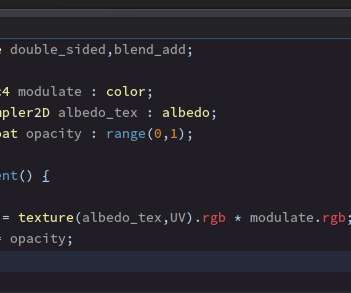

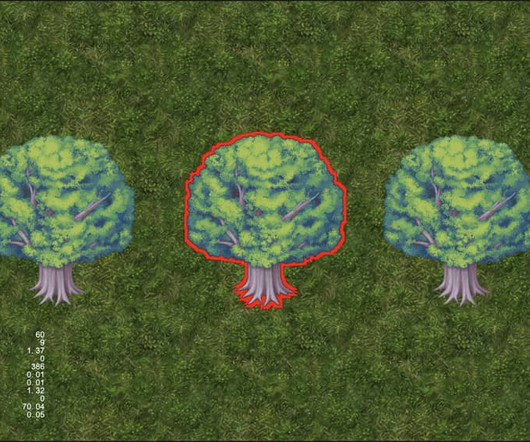

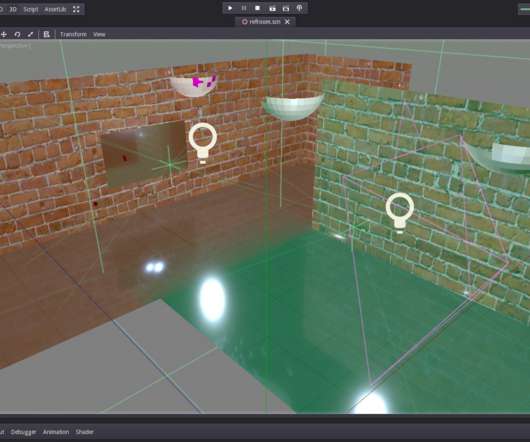

I’m trying to make an effect where pixels fade in with transparency, in sequence: first the “darkest” pixels, then the rest. The effect I want is “the pixels, like water, filling the empty mouth of a river.” But I couldn’t get the array of pixel colors from the image. Any help would be appreciated.
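To make the goal concrete, here is a minimal sketch of the ordering step, assuming the pixel data is already available as a flat RGBA byte buffer (4 bytes per pixel, row by row). How you obtain that buffer depends on the engine and version; in a plain browser, for example, `CanvasRenderingContext2D.getImageData` returns data in this layout. The names `PixelInfo` and `pixelsDarkestFirst` are hypothetical, and the Rec. 709 luma weights are just one reasonable way to define “darkest”.

```typescript
// Hypothetical helper type: one entry per pixel, with its position and color.
interface PixelInfo {
  x: number;
  y: number;
  r: number;
  g: number;
  b: number;
  a: number;
  luminance: number; // 0 (black) .. 255 (white)
}

// Assumption: `rgba` is a flat buffer in RGBA order, 4 bytes per pixel,
// stored row by row (row-major), so its length is width * height * 4.
function pixelsDarkestFirst(
  rgba: Uint8Array | Uint8ClampedArray,
  width: number,
  height: number
): PixelInfo[] {
  if (rgba.length !== width * height * 4) {
    throw new Error("Buffer size does not match width * height * 4");
  }

  const pixels: PixelInfo[] = [];
  for (let y = 0; y < height; y++) {
    for (let x = 0; x < width; x++) {
      const i = (y * width + x) * 4; // offset of this pixel's red byte
      const r = rgba[i];
      const g = rgba[i + 1];
      const b = rgba[i + 2];
      const a = rgba[i + 3];
      // Rec. 709 luma weights; an assumed definition of "darkness".
      const luminance = 0.2126 * r + 0.7152 * g + 0.0722 * b;
      pixels.push({ x, y, r, g, b, a, luminance });
    }
  }

  // Darkest pixels first; pixels with equal luminance keep their scan order.
  pixels.sort((p, q) => p.luminance - q.luminance);
  return pixels;
}

// Usage: a 2x1 image with a white pixel followed by a black pixel.
const sample = new Uint8Array([255, 255, 255, 255, 0, 0, 0, 255]);
const order = pixelsDarkestFirst(sample, 2, 1);
console.log(order.map((p) => `(${p.x}, ${p.y})`).join(" "));
```

The returned array can then drive the animation: reveal pixels in this order, a few per frame, raising each pixel’s alpha from 0 to its original value.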
